LanceDB Cloud REST API

Introduction

API reference for LanceDB Cloud with Python, JavaScript, and Rust SDK examples.

The LanceDB Cloud REST API lets you interact with your remote tables using standard HTTP requests. The LanceDB Quickstart will get you up and running in 5 minutes!

Authentication

All HTTP requests to LanceDB APIs must contain an `x-api-key` header that specifies a valid API key and must be encoded as JSON or Arrow RPC.

Get the API Key

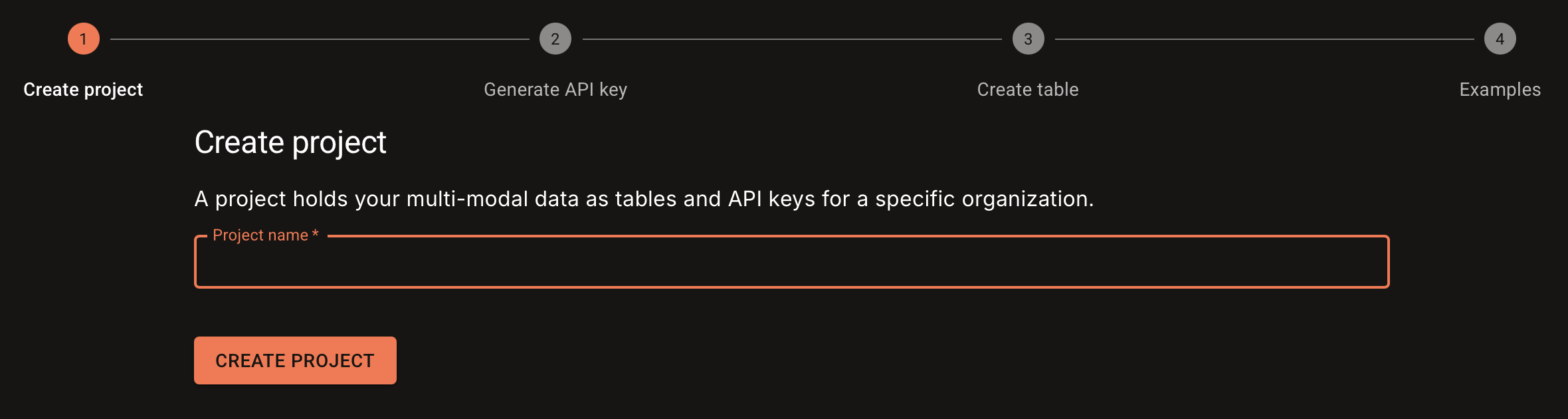

- Go to LanceDB Cloud and complete the onboarding.

- Let’s call this particular Project `embedding`.

- Save the API key and the project instance name: `embedding-yhs6bg`.

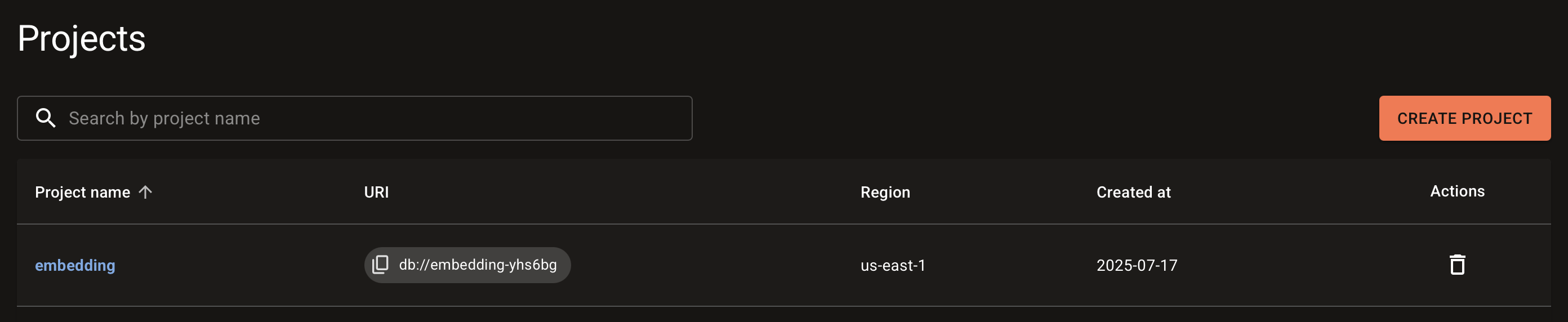

- In your terminal, check that the remote Project exists. Specify the remote LanceDB Project's `db` and `region`.

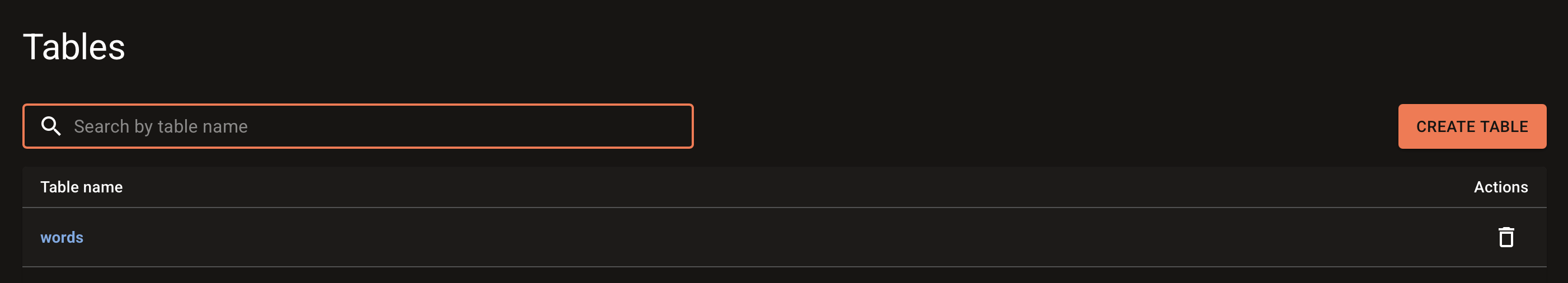
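The terminal check above can be sketched with Python's standard library. This is a minimal sketch, not the official SDK. It only prepares the request so you can see where the `x-api-key` header goes. Note the following assumptions:

- The host pattern `https://{db}.{region}.api.lancedb.com` and the list-tables path `/v1/table/` are assumptions; verify them against the REST reference.
- `YOUR_API_KEY` is a placeholder.
- The helper names `base_url` and `list_tables_request` are hypothetical.

```python
import urllib.request


# Assumption: LanceDB Cloud hosts each project at https://{db}.{region}.api.lancedb.com.
def base_url(db: str, region: str) -> str:
    """Build the (assumed) REST host for a LanceDB Cloud project."""
    return f"https://{db}.{region}.api.lancedb.com"


# Hypothetical helper; the /v1/table/ path is an assumption to verify.
def list_tables_request(db: str, region: str, api_key: str) -> urllib.request.Request:
    """Prepare (but do not send) a GET request that lists the project's tables."""
    return urllib.request.Request(
        url=f"{base_url(db, region)}/v1/table/",
        method="GET",
        # Every request must carry a valid API key in the x-api-key header.
        headers={"x-api-key": api_key},
    )


# Values from the steps above; the key is a placeholder.
req = list_tables_request("embedding-yhs6bg", "us-east-1", "YOUR_API_KEY")
print(req.get_method(), req.full_url)
```

To actually send the request, pass `req` to `urllib.request.urlopen`. HTTP header names are case-insensitive, so `urllib` storing the header as `X-api-key` does not matter to the server.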

- Now, create a Table to store data. Let’s call it `words`. In this example:
  - the `db` is `embedding-yhs6bg`
  - the `region` is `us-east-1`
  - the name of the table is `words`

- Now check that the Table has been created:
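The two steps above (create `words`, then confirm it exists) can be sketched the same way, again using only the standard library. This is a hedged sketch, not the documented client. All of the following are assumptions to check against the REST reference, not facts stated on this page:

- the host pattern;
- the create path `/v1/table/{name}/create/`;
- the `application/vnd.apache.arrow.stream` content type for the Arrow body;
- the `{"tables": [...]}` shape of the list-tables response.

The helpers `create_table_request` and `table_exists` are hypothetical.

```python
import json
import urllib.request

# Assumption: table data is uploaded as an Arrow IPC stream.
ARROW_STREAM = "application/vnd.apache.arrow.stream"


# Hypothetical helper; the host and /v1/table/{name}/create/ path are assumptions.
def create_table_request(
    db: str, region: str, api_key: str, table: str, arrow_ipc_body: bytes
) -> urllib.request.Request:
    """Prepare (but do not send) a POST that creates `table` from Arrow IPC bytes."""
    return urllib.request.Request(
        url=f"https://{db}.{region}.api.lancedb.com/v1/table/{table}/create/",
        data=arrow_ipc_body,
        method="POST",
        headers={"x-api-key": api_key, "Content-Type": ARROW_STREAM},
    )


# Hypothetical helper; assumes the list-tables body looks like {"tables": [...]}.
def table_exists(list_tables_body: bytes, name: str) -> bool:
    """Return True if `name` appears in an (assumed) list-tables JSON response."""
    payload = json.loads(list_tables_body)
    return name in payload.get("tables", [])


# Values from the steps above; the API key and the empty body are placeholders.
req = create_table_request("embedding-yhs6bg", "us-east-1", "YOUR_API_KEY", "words", b"")
print(req.get_method(), req.full_url)
# Illustrative list-tables body, not real server output.
print(table_exists(b'{"tables": ["words"]}', "words"))  # True
```

To produce a real request body, you could serialize a `pyarrow.Table` with `pyarrow.ipc.new_stream`, then send the request with `urllib.request.urlopen`. Parsing the response separately keeps the "does `words` exist?" check independent of how the HTTP call is made.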

That’s it - you’re connected! Now, you can start adding data and querying it.

The best way to start is to try the LanceDB Quickstart or read the documentation site.